Friday, May 23, 2008

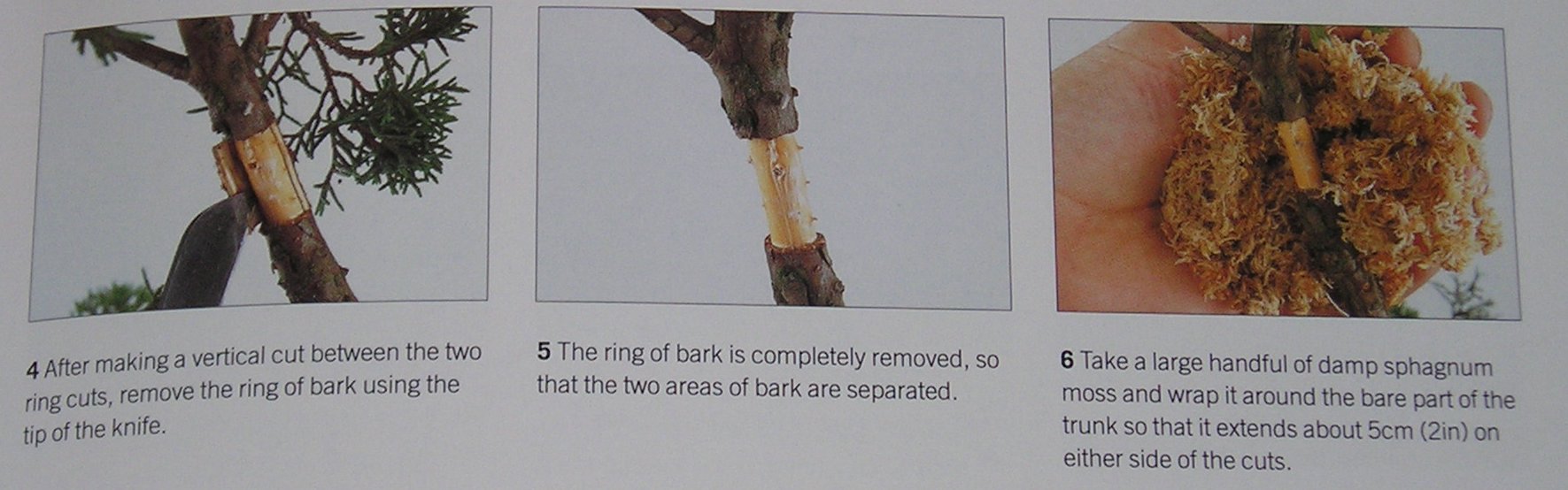

Okay, so gardening. I'll make it quick. I read in my bonsai book that if you have problems propagating from cuttings, you can grow roots on the original plant in a process called air-layering:

I tried that with my dwarf schefflera (at least I think that's what it is). Here's the parent plant (it's in a pot with 2 chillies and a mint):

But the air layer failed. No roots grew. And as you probably guessed I failed with cuttings, too. But I don't like giving up, so I stuck the cutting in some water:

It has done well. It's in a tall thin jar half full of water, in a pot that's backfilled with pebbles. This keeps the rooting part warm, which I understand is important. It is now finally growing roots:

By the way, there was no sign of those roots when it was being air layered - they've all popped out since it has been in the water. I had it outside, but a couple of the roots died and I decided it was too cold out there so I bought it inside. And today I noticed there are lost more roots starting to stick out through the bark (from cracks that run in the direction of the stem, not from those popcorn-looking bits).

So here's my conclusion about air layering schefflera (umbrella trees). There's nothing to be gained from cutting a ring of bark off. The roots don't grow out of the cut bark - they just grow out of normal bark. In fact, they grow out of the brown ~2mm long cracks you can see on every part of every branch.

In summary:

- If you're going to air layer a schefflera, the important points are making sure it's very wet and not cutting through the bark (though it may turn out that cutting through the bark encourages root production)

- To strike a cutting, focus on keeping the rooting area (which is the bark) wet

- But at the same time, remember that the cutting will drink through the bottom of the cutting, so make sure it is a clean cut. It easily rots. Mine did, and I just cut an extra 5mm of bark off to keep it healthy.

Wednesday, May 21, 2008

- has a link to a known licence URL

- has a link that has rel="license" attribute in the tag, and a legal expert confirms that the link target is a licence URL

- has a meta tag with name="dc:rights" content="URL", and an expert confirms that the URL is a licence

- has embedded or external RDF+XML with license rdf:resource="URL"

- natural language, such as "This web page is licensed with a Creative Commons Attribution 1.0 Australia License"

- system is told by someone it trusts

- URL is in rel="license" link tag, expert confirms

- URL is in meta name="dc:rights" tag, expert confirms

- URL is in RDF license tag

- page contains an exact copy of a known licence

- system is told by someone it trusts

Labels: ben, quantification

The pages were drawn randomly from the dataset, though I'm not sure that my randomisation is great - I'll look into that. As I said in a previous post, the data aims to be a broad crawl of Australian sites, but it's neither 100% complete nor 100% accurate about sites being Australian.

By my calculations, if I were to run my analysis on the whole dataset, I'd expect to find approximately 1.3 million pages using rel="licence". But keep in mind that I'm not only running the analysis over three years of data, but that data also sometimes includes the same page more than once for a given year/crawl, though much more rarely than, say, the Wayback Machine does.

And of course, this statistic says nothing about open content licensing. I'm sure, as in I know, there are lots more pages out there that don't use rel="license".

(Tech note: when doing this kind of analysis, there's a race between I/O and processor time, and ideally they're both maxed out. Over last night's analysis, the CPU load - for the last 15 minutes at least, but I think that's representative - was 58%, suggesting that I/O is so far the limiting factor.)

Labels: ben, quantification, research

Monday, May 19, 2008

First, the National Library's crawls were outsourced to the Internet Archive, which is a good thing - it's been done well, the data is in a well defined format (a few sharp edges, but pretty good), and there's a decent knowledge-base out there already for accessing this data.

Now, there are two ways that IA chooses to include a page as Australian:

- domain name ends in '.au' (e.g. all web pages on the unsw.edu.au domain)

- IP address is registered as Australian in a geolocation database

Actually, there is a third kind of page in the crawls. The crawls were done with a setting that included some pages linked directly from Australian pages (example: slashdot.org), though not sub-pages of these. I'll have to address this, and I can think of a few ways:

- Do a bit of geolocation myself

- Exclude pages where sibling pages aren't in the crawl

- Don't make national-oriented conclusions, or when I do, restrict to the .au domains

- Argue that it's a small portion so don't worry about it

(Thanks to Alex Osborne and Paul Koerbin from the National Library for detailing the specifics for me)

Labels: ben

Possible outcomes from this include:

- Some meaningful consideration of how many web pages, on average, come about from a single decision to use a licence. For example, if you licence your blog and put a licence statement into your blog template, that would be one decision to use the licence, arguably one licensed work (your blog), but actually 192 web pages (with permalinks and all). I've got a few ideas about how to measure this, which I can go in to more depth about.

- How much of the Australian web is licensed, both proportionally (one page in X, one web site in X, or one 'document' in X), and in absolute terms (Y web pages, Y web sites, Y 'documents').

- Comparison of my results to proprietary search-engine based answers to the same question, to put my results in context.

- Comparison of various licensing mechanisms, including but not limited to: hyperlinks, natural language statements, dc rights in tags, rdf+xml.

- Comparison of use of various licences, and licensing elements.

- Changes in the answers to these questions over time.

Labels: ben, licensing, open content, quantification, research

Thursday, May 15, 2008

The recently released Federal Budget held at least one item of interest for the nation’s starving artists: a planned $1.5 million to be spent on establishing a resale royalty scheme. Such a scheme has long been advocated for (by Matthew Rimmer and the Arts Law Centre, amongst many others), in order to bring

The move to ensure that visual artists benefit from appreciation in the value of their works has been seen as particularly significant for Indigenous artists. Considering the current market for Australian Indigenous artists’ works, a right to resale royalties would translate to a not-insubstantial extra income for some better-known, sought-after artists.

The interesting part will be watching how the scheme develops – at the moment, tenders to administer the scheme should be sought in the later part of this year. For example, nothing appears to have been decided about the term that the right will operate for – that is, whether it will operate on the basis of life + 70 years, or how payments to estates of deceased artists might be managed.

At the same time as K-Rudd gives, however, he also taketh away.

Funding for some other arts sectors has, of course, been slashed – for example, the regional arts fund. So, if you’re an incredibly talented, established (and probably, quite old) artist, whose work has had the benefits of time and hype to appreciate (and which actually sells)– lucky you. That royalty cheque may be in the mail sooner than you thought.

For all those struggling unknowns out there, well, there’s every chance that the program you were relying on for a kick start may be pulled.

Looks like that starving artist cliché will be around (and pulling you a beer) for a while yet.

Labels: arts, copyright, sophia

Tuesday, May 13, 2008

The music industry is once again raising its shrill voice against ‘piracy’, running a campaign featuring a video starring well-known Australian musos. Artists including members of Silverchair, the Veronicas, and Jimmy Barnes discuss the ins and outs of being a musician, under the tag line: “just paying the rent", "not living like a rock star".

Musos who might actually be having difficulty paying their rent were notably absent from the credits – presumably they couldn’t get someone else to fill in for them down at the café that day, or were too busy uploading their latest single onto Trig.

Unsurprisingly, the campaign has become controversial, inciting much media commentary (check some out here, and here) and raising the ire of Frenzal Rhomb guitarist Lindsay McDougall. McDougall originally appeared in the video, and now says it was on false pretences. According to Crikey, he was

“furious at being ‘lumped in with this witch hunt’ and that he had been ‘completely taken out of context and defamed’ by the Australian music industry, which funded the video. He said he was told the 10-minute film, which is being distributed for free to all high schools in

The original clip including his input has been removed, but is archived.

And what would a debate involving artists’ rights be without a manifesto? ‘Tune out’ has obligingly penned one in response to the In Tune campaign.

Perhaps in an effort to appear marginally down-with-the-kids, the industry campaign page has this pseudo-licence, below, in its footer. It is in some ways similar to a ‘Free for Education’- style licence, and/or may invite the false expectation that they support a sort of personal "fair use" model in some circumstances:

“In Tune was produced with the support of the Australian music industry.

In Tune can be used for personal use and as a free non-commercial

educational resource. For more info, email: intunedoco@gmail.com"

So, there you have it – the kid drummer from Operator Please thinks MySpace is pretty cool, Lindsay McDougall continues to stick it to the Man, and the Veronicas look flawless even when they’re concerned and slightly annoyed.

Thank goodness for free (for educational purposes only) online videos.

Thursday, May 01, 2008

I said I'd like to see an interface that (among other nice-to-haves) answers questions like "give me everything you've got from cyberlawcentre.org/unlocking-ip", and it turns out that that's actually possible with The Wayback Machine. Not in a single request, that I know of, but with this (simple old HTTP) request: http://web.archive.org/web/*xm_/www.cyberlawcentre.org/unlocking-ip/*, you can get a list of all URLs under cyberlawcentre.org/unlocking-ip, and then if were to want to you could do another HTTP request for each URL.

Pretty cool actually, thanks Alex.

Now I wonder how big you can scale those requests up to... I wonder what happens if you ask for www.*? Or (what the heck, someone has to say it) just '*'. I guess you'd probably break the Internet...